Why insertion throughput can be reduced with an increase of batch size?

| From: | Павел Филонов <filonovpv(at)gmail(dot)com> |
|---|---|
| To: | pgsql-general(at)postgresql(dot)org |
| Subject: | Why insertion throughput can be reduced with an increase of batch size? |
| Date: | 2016-08-22 06:53:00 |
| Message-ID: | CADcp+YCS1=EX+nNtcCWPTy3-BrT+XPJ3kwJkz_Fjp0hyvPRfKg@mail.gmail.com |
| Lists: | pgsql-general |

Greetings, everybody!

I recently made an observation that I cannot explain: why can insertion throughput decrease as the batch size increases?

A brief description of the experiment:

- PostgreSQL 9.5.4 as server

- https://github.com/sfackler/rust-postgres library as client driver

- one relation with two indexes (schema attached)

Experiment steps:

- populate the database with 259,200,000 random records

- insert for 60 seconds with one client thread and batch size = m

- record insertions per second (ips) in the client code
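The post does not say how a batch of m rows is sent to the server. One common approach is a single multi-row `INSERT` with one placeholder per value. The sketch below only builds such a statement as text, assuming a hypothetical table `records(a, b)` (the real schema is only in the attached `postgres.sql`) and PostgreSQL's `$n` placeholder syntax; it is not the author's rust-postgres client code.

```python
def batch_insert_sql(table: str, columns: list[str], m: int) -> str:
    """Build one multi-row INSERT carrying m rows of $n placeholders.

    `table` and `columns` are hypothetical stand-ins for the attached schema.
    """
    if m < 1:
        raise ValueError("batch size m must be >= 1")
    ncols = len(columns)
    rows = []
    for r in range(m):
        # Row r uses placeholders $(r*ncols + 1) .. $(r*ncols + ncols)
        params = ", ".join(f"${r * ncols + c + 1}" for c in range(ncols))
        rows.append(f"({params})")
    return f"INSERT INTO {table} ({', '.join(columns)}) VALUES {', '.join(rows)}"


# Example: a batch of m = 2 rows for the hypothetical table
print(batch_insert_sql("records", ["a", "b"], 2))
```

With this scheme the statement text and the number of bound parameters both grow linearly with m.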

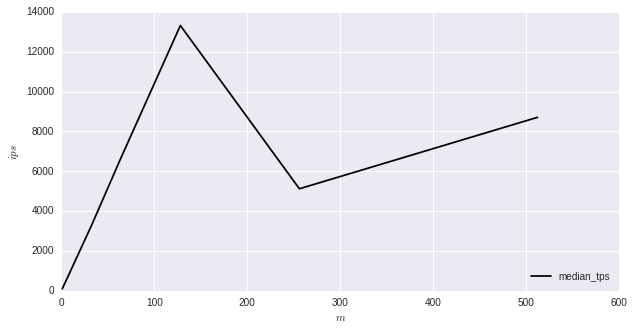

The attached plot shows median ips as a function of m for m in [2^0, 2^1, ..., 2^15].
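The bookkeeping behind such a plot can be sketched as follows: for each batch size in the sweep, convert the rows inserted per sampling interval into insertions per second, then take the median. This is a minimal sketch assuming one ips sample per fixed-length interval (the post does not say how often ips was sampled); the function names are hypothetical.

```python
import statistics

# Batch sizes in the sweep: 2^0, 2^1, ..., 2^15
BATCH_SIZES = [2 ** k for k in range(16)]


def insertions_per_second(rows_inserted: int, elapsed_seconds: float) -> float:
    """Throughput of a single sampling interval."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return rows_inserted / elapsed_seconds


def median_ips(rows_per_interval: list[int], interval_seconds: float = 1.0) -> float:
    """Median throughput over all intervals of one run (hypothetical sampling scheme)."""
    samples = [insertions_per_second(n, interval_seconds) for n in rows_per_interval]
    return statistics.median(samples)
```

The median is less sensitive than the mean to a few unusually slow or fast intervals within a 60-second run.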

The figure shows that going from m = 128 to m = 256 reduces throughput from about 13,000 ips to about 5,000 ips.
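Expressed as time per batch, the two reported data points differ by more than the doubling of m alone would explain:

$$
t_{\text{batch}}(m) = \frac{m}{\text{ips}(m)}, \qquad
t_{\text{batch}}(128) = \frac{128}{13\,000}\ \text{s} \approx 9.85\ \text{ms}, \qquad
t_{\text{batch}}(256) = \frac{256}{5\,000}\ \text{s} = 51.2\ \text{ms}
$$

So $t_{\text{batch}}(256) / t_{\text{batch}}(128) = 2 \cdot 13\,000 / 5\,000 = 5.2$, whereas a fixed cost per row would make this ratio exactly 2.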

I hope someone can help me understand the reason for this behavior.

--

Best regards

Filonov Pavel

| Attachment | Content-Type | Size |
|---|---|---|
| postgres.sql | application/sql | 144 bytes |
| | image/png | 16.1 KB |

Responses

- Re: Why insertion throughput can be reduced with an increase of batch size? at 2016-08-22 20:13:04 from Jeff Janes

- Re: Why insertion throughput can be reduced with an increase of batch size? at 2016-08-23 01:02:57 from Adrian Klaver
