Memory leak in WAL sender with pgoutput (v10~)

| From: | Michael Paquier <michael(at)paquier(dot)xyz> |

|---|---|

| To: | Postgres hackers <pgsql-hackers(at)lists(dot)postgresql(dot)org> |

| Subject: | Memory leak in WAL sender with pgoutput (v10~) |

| Date: | 2024-11-29 02:05:51 |

| Message-ID: | Z0khf9EVMVLOc_YY@paquier.xyz |

| Views: | Whole Thread | Raw Message | Download mbox | Resend email |

| Thread: | |

| Lists: | pgsql-hackers |

Hi all,

This is a follow-up of ea792bfd93ab and this thread where I've noticed

that some memory was still leaking when running sysbench with a

logical replication setup:

https://www.postgresql.org/message-id/Zz7SRcBXxhOYngOr@paquier.xyz

Reproducing the problem is quite simple, and can be done with the

following steps (please adapt, like previous thread):

1) Initialize a primary and set up some empty tables:

NUM_TABLES=500

PRIMARY_PORT=5432

STANDBY_PORT=5433

sysbench oltp_read_only --db-driver=pgsql \

--pgsql-port=$PRIMARY_PORT \

--pgsql-db=postgres \

--pgsql-user=postgres \

--pgsql-host=/tmp \

--tables=$NUM_TABLES --table_size=0 \

--report-interval=1 \

--threads=1 prepare

2) Create a standby, promote it:

STANDBY_DATA=/define/your/standby/path/

pg_basebackup --checkpoint=fast -D $STANDBY_DATA -p $PRIMARY_PORT -h /tmp/ -R

echo "port = $STANDBY_PORT" >> $STANDBY_DATA/postgresql.conf

pg_ctl -D $STANDBY_DATA start

pg_ctl -D $STANDBY_DATA promote

3) Publication and subscription

psql -p $PRIMARY_PORT -c "CREATE PUBLICATION pub1 FOR ALL TABLES;"

psql -p $STANDBY_PORT -c "CREATE SUBSCRIPTION sub1 CONNECTION

'port=$PRIMARY_PORT user=postgres dbname=postgres PUBLICATION pub1 WITH

(enabled, create_slot, slot_name='pub1_to_sub1', copy_data=false);"

4) Run sysbench:

sysbench oltp_write_only --db-driver=pgsql \

--pgsql-port=$PRIMARY_PORT \

--pgsql-db=postgres \

--pgsql-user=postgres \

--pgsql-host=/tmp \

--tables=$NUM_TABLES --table_size=100 \

--report-interval=1 \

--threads=$NUM_THREADS run \

--time=5000

With that, I've mentioned internally that there was an extra leak and

left the problem lying aside for a few days before being able to come

back to it. In-between, Jeff Davis has done a review of the code to

note that we leak memory in the CacheMemoryContext, due to the

list_free_deep() done in get_rel_sync_entry() that misses the fact

that the publication name is pstrdup()'d in GetPublication(), but

list_free_deep() does not know that so the memory of the strdupped

publication names stays around. That's wrong.

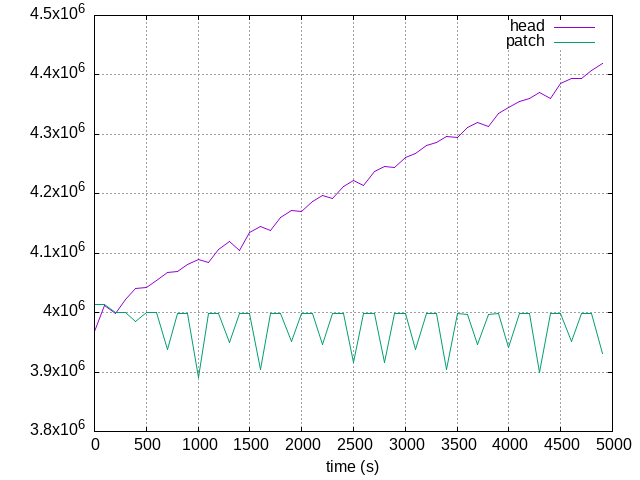

Back to today, I've done more benchmarking using the above steps and

logged periodic memory samples of the WAL sender with

pg_log_backend_memory_contexts() (100s, 50 points), and analyzed the

evolution of the whole thing. "Grand Total" used memory keeps

increasing due to one memory context: CacheMemoryContext. So yes,

Jeff has spotted what looks like the only leak we have.

Attached is a graph that shows the evolution of memory between HEAD

and a patched version for the used memory of CacheMemoryContext

(ylabel is in bytes, could not get that right as my gnuplot skills are

bad).

One thing to note in this case is that I've made the leak more

noticeable with this tweak to force the publication to be reset each

time with go through get_rel_sync_entry(), or memory takes much longer

to pile up. That does not change the problem, speeds up the detection

by a large factor:

--- a/src/backend/replication/pgoutput/pgoutput.c

+++ b/src/backend/replication/pgoutput/pgoutput.c

@@ -2061,12 +2061,13 @@ get_rel_sync_entry(PGOutputData *data, Relation relation)

List *rel_publications = NIL;

/* Reload publications if needed before use. */

- if (!publications_valid)

+ elog(WARNING, "forcing reload publications");

{

oldctx = MemoryContextSwitchTo(CacheMemoryContext);

if (data->publications)

{

list_free_deep(data->publications);

This problem exists since the introduction of logical replication,

down to v10. It would be sad to have an extra loop on publications to

free explicitely the publication names, and the issue would still be

hidden to the existing callers of GetPublication(), so I'd suggest to

change the Publication structure to use a NAMEDATALEN string to force

the free to happen, as in the attached.

That means an ABI break. I've looked around and did not notice any

out-of-core code relying on sizeof(Publication). That does not mean

that nothing would break, of course, but the odds should be pretty

good as this is a rather internal structure. One way would be to just

fix that on HEAD and call it a day, but I'm aware of heavy logirep

users whose workloads would be able to see memory bloating because

they maintain WAL senders around.

Thoughts or comments?

--

Michael

| Attachment | Content-Type | Size |

|---|---|---|

| all_used_total.csv | text/csv | 1.0 KB |

|

image/png | 29.6 KB |

| logirep_cache_leak.patch | text/x-diff | 997 bytes |

Responses

- Re: Memory leak in WAL sender with pgoutput (v10~) at 2024-11-30 03:58:44 from Michael Paquier

Browse pgsql-hackers by date

| From | Date | Subject | |

|---|---|---|---|

| Next Message | Junwang Zhao | 2024-11-29 02:15:04 | Re: Make COPY format extendable: Extract COPY TO format implementations |

| Previous Message | David Rowley | 2024-11-29 02:02:39 | Re: Remove useless GROUP BY columns considering unique index |