Re: generic plans and "initial" pruning

| From: | Tomas Vondra <tomas(at)vondra(dot)me> |

|---|---|

| To: | Amit Langote <amitlangote09(at)gmail(dot)com>, Robert Haas <robertmhaas(at)gmail(dot)com> |

| Cc: | Alvaro Herrera <alvherre(at)alvh(dot)no-ip(dot)org>, Andres Freund <andres(at)anarazel(dot)de>, Daniel Gustafsson <daniel(at)yesql(dot)se>, David Rowley <dgrowleyml(at)gmail(dot)com>, PostgreSQL Hackers <pgsql-hackers(at)postgresql(dot)org>, Thom Brown <thom(at)linux(dot)com>, Tom Lane <tgl(at)sss(dot)pgh(dot)pa(dot)us> |

| Subject: | Re: generic plans and "initial" pruning |

| Date: | 2024-12-01 18:36:21 |

| Message-ID: | 54c35fb9-da3a-4754-ab8c-46ed0b612465@vondra.me |

| Views: | Whole Thread | Raw Message | Download mbox | Resend email |

| Thread: | |

| Lists: | pgsql-hackers |

Hi,

I took a look at this patch, mostly to familiarize myself with the

pruning etc. I have a bunch of comments, but all of that is minor,

perhaps even nitpicking - with prior feedback from David, Tom and

Robert, I can't really compete with that.

FWIW the patch needs a rebase, there's a minor bitrot - but it was

simply enough to fix for a review / testing.

0001

----

1) But if we don't expect this error to actually happen, do we really

need to make it ereport()? Maybe it should be plain elog(). I mean, it's

"can't happen" and thus doesn't need translations etc.

if (!bms_equal(relids, pruneinfo->relids))

ereport(ERROR,

errcode(ERRCODE_INTERNAL_ERROR),

errmsg_internal("mismatching PartitionPruneInfo found at

part_prune_index %d",

part_prune_index),

errdetail_internal("plan node relids %s, pruneinfo

relids %s",

bmsToString(relids),

bmsToString(pruneinfo->relids)));

Perhaps it should even be an assert?

2) unnecessary newline added to execPartition.h

3) this comment in EState doesn't seem very helpful

List *es_part_prune_infos; /* PlannedStmt.partPruneInfos */

5) PlannerGlobal

/* List of PartitionPruneInfo contained in the plan */

List *partPruneInfos;

Why does this say "contained in the plan" unlike the other fields? Is

there some sort of difference? I'm not saying it's wrong.

0002

----

1) Isn't it weird/undesirable partkey_datum_from_expr() loses some of

the asserts? Would the assert be incorrect in the new implementation, or

are we removing it simply because we happen to not have one of the fields?

2) inconsistent spelling: run-time vs. runtime

3) PartitionPruneContext.is_valid - I think I'd rename the flag to

"initialized" or something like that. The "is_valid" is a bit confusing,

because it might seem the context can get invalidated later, but AFAICS

that's not the case - we just initialize it lazily.

0003

----

1) In InitPlan I'd move

estate->es_part_prune_infos = plannedstmt->partPruneInfos;

before the comment, which is more about ExecDoInitialPruning.

2) I'm not quite sure what "exec" partition pruning is?

/*

* ExecInitPartitionPruning

* Initialize the data structures needed for runtime "exec" partition

* pruning and return the result of initial pruning, if available.

Is that the same thing as "runtime pruning"?

0004

----

1) typo: paraller/parallel

2) What about adding an assert to ExecFindMatchingSubPlans, to check

valisubplan_rtis is not NULL? It's just mentioned in a comment, but

better to explicitly enforce that?

2) It may not be quite clear why ExecInitUpdateProjection() switches to

mt_updateColnosLists. Should that be explained in a comment, somewhere?

3) unnecessary newline in ExecLookupResultRelByOid

0005

----

1) auto_explain.c - So what happens if the plan gets invalidated? The

hook explain_ExecutorStart returns early, but then what? Does that break

the user session somehow, or what?

2) Isn't it a bit fragile if this requires every extension to update

and add the ExecPlanStillValid() calls to various places? What if an

extension doesn't do that? What weirdness will happen? Maybe it'd be

possible to at least check this in some other executor hook? Or at least

we could ensure the check was done in assert-enabled builds? Or

something to make extension authors aware of this?

Aside from going through the patches, I did a simple benchmark to see

how this works in practice. I did a simple test, with pgbench -S and

variable number of partitions/clients. I also varied the number of locks

per transaction, because I was wondering if it may interact with the

fast-path improvements. See the attached xeon.sh script and CSV with

results from the 44/88-core machine.

There's also two PDFs visualizing the results, to show the impact as a

difference between "master" (no patches) vs. "pruning" build with v57

applied. As usual, "green" is good (faster), read is "bad" (slower).

For most combinations of parameters, there's no impact on throughput.

Anything in 99-101% is just regular noise, possibly even more. I'm

trying to reduce the noise a bit more, but this seems acceptable. I'd

like to discuss three "cases" I see in the results:

1) bad #1

IIRC the patch should not affect results for "force_custom_plan" cache

mode (and "auto", which does mostly the same thing, I think). And for

most runs that's true, with results ~100% of master. But there's a

couple curious exceptions - e.g. results for 0 partitions and 16 locks

show a consistent regression of ~10% (in the "-M prepared" mode).

I'm not terribly worried about this because it only shows for 16 locks,

and the default is 64. If someone reduces this GUC value, they should

expect some impact.

Still, it only shows in the "auto" case. I wonder why is that. Strange.

2) bad #2

There's also a similar regression in the "force_generic_plan" without

partitions (with "-M prepared"). This seems more consistent and affects

all the lock counts.

3) good

There's an area os massive improvements (in the 2-200x range) with 100+

partitions. The fast-path patch helped a bit, but this is much better,

of course.

costing / auto mode

-------------------

Anyway, this leads me to a related question - not quite a "bug" in the

patch, but something to perhaps think about. And that's costing, and

what "auto" should do.

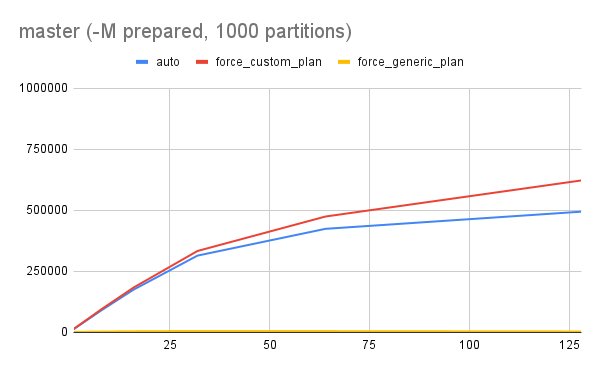

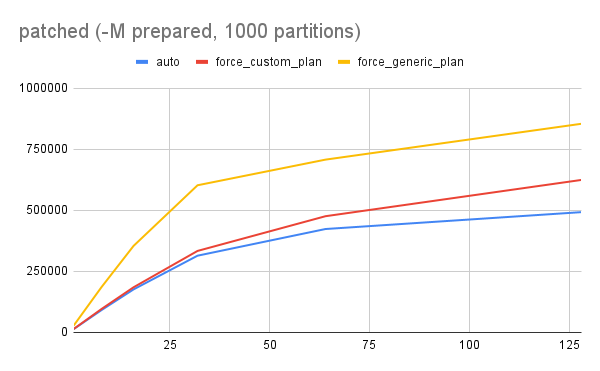

There are two PNG charts, showing throughput for runs with -M prepared

and 1000 partitions. Each chart shows throughput for the three cache

modes, and different client counts. There's a clear distinction between

"master" and "patched" runs - the "generic" plans performed terribly, by

orders of magnitude. With the patches it beats the "custom" plans.

Which is great! But it also means that while "auto" used to do the right

thing, with the patches that's not the case.

AFAIK that's because we don't consider the runtime pruning when costing

the plans, so the cost is calculated as if no pruning happened. And so

it seems way more expensive than it should ... and it loses with the

custom scans. Is that correct, or do I understand this wrong?

Just to be clear, I'm not claiming the patch has to deal with this. I

suppose it can be handled as a future improvement, and I'm not even sure

there's a good way to consider this during costing. For example, can we

estimate how many partitions will be pruned?

regards

--

Tomas Vondra

| Attachment | Content-Type | Size |

|---|---|---|

| xeon-complete.pdf | application/pdf | 67.5 KB |

| xeon-prepared.pdf | application/pdf | 34.8 KB |

| xeon.csv.gz | application/gzip | 152.7 KB |

| xeon.sh | application/x-shellscript | 1.7 KB |

|

image/png | 18.5 KB |

|

image/png | 23.5 KB |

In response to

- Re: generic plans and "initial" pruning at 2024-10-25 12:30:24 from Amit Langote

Responses

- Re: generic plans and "initial" pruning at 2024-12-04 13:34:16 from Amit Langote

Browse pgsql-hackers by date

| From | Date | Subject | |

|---|---|---|---|

| Next Message | Dmitry Dolgov | 2024-12-01 19:00:14 | Re: CRC32C Parallel Computation Optimization on ARM |

| Previous Message | Andres Freund | 2024-12-01 18:23:53 | Re: cannot to compile extension by meson on windows |